Teaching machines to make your life easier – quality work on Wikidata

WMDE general

2 January 2016

A post by Amir Sarabadani and Aaron Halfaker

Today we want to talk about a new web service for supporting quality control work in Wikidata. The Objective Revision Evaluation Service (ORES) is an artificial intelligence web service that will help Wikidata editors perform basic quality control work more efficiently. ORES predicts which edits will need to be reverted. This service is used on other wikis to support quality control work. Now, Wikidata editors will get to reap the benefits as well.

Making quality control work efficient

In the last month, Wikidata averaged ~200K edits per day from (apparently) human editors, or about 2 edits per second. Assuming that each edit takes 5 seconds to review (which is quite fast; MediaWiki alone can take that long just to load a diff page), we would need to spend ~277 hours every day just reviewing incoming edits. Needless to say, that does not happen, and it is a problem, because vandalism stays in place until someone notices it.
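The estimate above is simple arithmetic, restated here as a few lines of Python using the post's own figures (~200K edits per day, 5 seconds per review). The function name is illustrative only.

```python
# Back-of-the-envelope estimate of the manual review workload described above.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def review_workload(edits_per_day: int, seconds_per_review: float) -> tuple[float, float]:
    """Return (edits per second, hours of human review needed per day)."""
    edits_per_second = edits_per_day / SECONDS_PER_DAY
    review_hours = edits_per_day * seconds_per_review / 3600
    return edits_per_second, review_hours


rate, hours = review_workload(200_000, 5)
print(f"{rate:.1f} edits/s, {hours:.1f} review hours per day")  # 2.3 edits/s, 277.8 review hours per day
```

Note that 200,000 edits at 5 seconds each is one million seconds of review, which is where the ~277 hours comes from.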

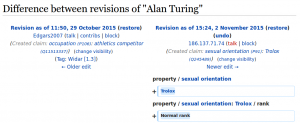

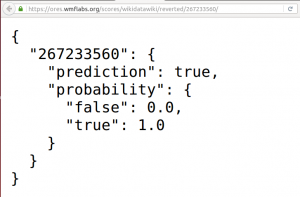

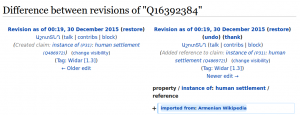

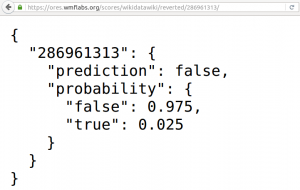

An automated scoring system like ORES can dramatically reduce that workload. For each revision, ORES gives you a score indicating the probability that it is vandalism. One edit, for example, scored 51%, which means it is probably okay, while another scored 100%, which means it is probably vandalism (and it is). Through this simple web interface, ORES makes it easy to tap into a powerful artificial intelligence that is trained to detect damaging edits.

We can use these predictions to sort edits by the probability that they are damaging. This allows recent changes patrollers to focus their attention on the most problematic edits. By focusing on the 1% highest-scored edits, we can catch nearly 100% of vandalism, reducing the time and attention that patrollers need to spend reviewing the recent changes feed (even with bots excluded) by 99%. You can read more in this Phabricator task. This kind of efficiency improvement is exactly what Wikidata needs in order to operate at scale.
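A minimal sketch of that triage, assuming every recent change has already been given a damage probability by ORES. The `ScoredEdit` class, the function names, and the toy scores in the usage line are hypothetical and only illustrate the sort-and-cut idea; they are not part of ORES.

```python
import math
from dataclasses import dataclass


@dataclass
class ScoredEdit:
    rev_id: int
    damaging_probability: float  # hypothetical field: a score between 0 and 1


def review_queue(edits: list[ScoredEdit], fraction: float = 0.01) -> list[ScoredEdit]:
    """Return the highest-scored `fraction` of edits, most suspicious first."""
    ranked = sorted(edits, key=lambda e: e.damaging_probability, reverse=True)
    cutoff = max(1, math.ceil(len(ranked) * fraction))  # always review at least one edit
    return ranked[:cutoff]


def recall(queue: list[ScoredEdit], vandalism_ids: set[int]) -> float:
    """Share of known vandalism that ends up in the review queue."""
    if not vandalism_ids:
        return 1.0
    caught = sum(1 for e in queue if e.rev_id in vandalism_ids)
    return caught / len(vandalism_ids)


# Toy data: 198 harmless-looking edits plus two high-scoring bad ones.
edits = [ScoredEdit(i, 0.05) for i in range(198)]
edits += [ScoredEdit(1000, 0.97), ScoredEdit(1001, 0.91)]
queue = review_queue(edits)
print([e.rev_id for e in queue], recall(queue, {1000, 1001}))
```

Patrollers would read `queue` instead of the whole feed; in practice, how much vandalism lands in the top 1% depends entirely on how well the model's scores separate good and bad edits.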

A note of caution: These machine learning models will help find damaging edits, but they are inherently imperfect. They will make mistakes. Please use caution and keep this in mind. We want to learn from these mistakes to make the system better and to reduce the biases that have been learned by the models. When we first deployed ORES, we asked editors to help us flag mistakes. Past reports have helped us improve the accuracy of the model substantially.

How to use ORES

ORES is a web API, which means its results are more suitable for tools than for humans. You give ORES a revision ID and ORES responds with a prediction. Right now, ORES knows how to predict whether a Wikidata edit will need to be “reverted”. You can ask ORES to make a prediction by placing the revision ID to score in the address bar of your browser.
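A tool would typically make the same request from code. The sketch below builds a score URL and pulls the “reverted” probability out of the JSON reply. Both the base URL/path layout and the response shape (`{"<rev_id>": {"prediction": ..., "probability": {"true": p, "false": 1 - p}}}`) are assumptions modeled on the ORES endpoint of this period, and the endpoints may have changed since; check the ORES documentation before relying on them.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

# ASSUMPTION: base URL and path layout (/scores/<wiki>/<model>/<rev_id>/) are illustrative.
ORES_BASE = "https://ores.wmflabs.org/scores"


def score_url(rev_id: int, wiki: str = "wikidatawiki", model: str = "reverted") -> str:
    """Build the URL you would otherwise paste into the browser's address bar."""
    return f"{ORES_BASE}/{quote(wiki)}/{quote(model)}/{rev_id}/"


def reverted_probability(response_body: str, rev_id: int) -> float:
    """Extract P(reverted) from a reply, ASSUMING the response shape described above."""
    data = json.loads(response_body)
    return float(data[str(rev_id)]["probability"]["true"])


def fetch_reverted_probability(rev_id: int) -> float:
    """Network call to the (assumed) endpoint; not exercised in the usage below."""
    with urlopen(score_url(rev_id), timeout=10) as resp:
        return reverted_probability(resp.read().decode("utf-8"), rev_id)


# Usage with a hand-written sample reply (not real ORES output):
sample = '{"123": {"prediction": false, "probability": {"false": 0.9, "true": 0.1}}}'
print(score_url(123), reverted_probability(sample, 123))
```

Keeping URL construction and response parsing separate from the network call makes it easy to test a tool offline and to adapt it if the endpoint format differs.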

There are already some tools available for Wikidata that integrate ORES with the user interface. We recommend trying the ScoredRevisions gadget. We hope that, by posting this blog post, more tool developers will learn of ORES and start experimenting with the service.

Not just quality control

We encourage you to think creatively about how you use the signal that ORES provides. As we have said, damage detection scores can be used to highlight the worst edits, but they can also be used to highlight the best. Recent research shows how we can flip the damage predictions upside down to detect good-faith newcomers and direct them toward support and training (Halfaker, Aaron; et al. “Snuggle: Designing for efficient socialization and ideological critique”. ACM CHI 2014). While newcomer retention on Wikidata is still high compared to the large Wikipedias, it is following a similar downward trend. Since Wikidata is still a young Wikimedia project, we have an opportunity to do better during this critical stage by making sure that we both efficiently revert vandalism and route good-faith newcomers to support and training.
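As a hypothetical illustration of “flipping” the scores, the sketch below averages the damage probabilities of each newcomer's edits and surfaces the newcomers whose edits look least likely to be damaging. The function name, the 0.2 threshold, and the sample usernames are made up, and this is a deliberate simplification, not how Snuggle itself ranks users.

```python
from collections import defaultdict
from statistics import mean


def good_faith_newcomers(edits, max_mean_damage=0.2):
    """edits: iterable of (username, damaging_probability) pairs for newcomers' edits.

    Return usernames whose edits look, on average, unlikely to be damaging,
    ordered from most to least promising (lowest average score first).
    """
    by_user = defaultdict(list)
    for user, p in edits:
        by_user[user].append(p)
    averages = {user: mean(ps) for user, ps in by_user.items()}
    promising = [u for u, avg in averages.items() if avg <= max_mean_damage]
    return sorted(promising, key=lambda u: averages[u])


newcomer_edits = [("Alice", 0.05), ("Alice", 0.10), ("Bob", 0.90), ("Carol", 0.15)]
print(good_faith_newcomers(newcomer_edits))  # ['Alice', 'Carol']
```

The same scores that push Bob's edits to the top of a patrol queue push Alice and Carol to the top of a list of people worth welcoming.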

Getting involved

There are many ways in which you can contribute to the project. Please see our project documentation, contact information, and IRC chatroom. You can also subscribe to our mailing list.

Several tasks directly help Wikidata. By reporting mistakes ORES makes, you can help us improve it faster. You can also help us build training data by labeling edits as damaging or not in Wikidata:Edit labels. With your help, ORES will soon be able to differentiate good-faith damage from vandalism.

We are also currently looking for volunteers and collaborators with a variety of skills:

- UI developers (JavaScript and HTML)

- Backend developers (Python, RDBMS, distributed systems)

- Social scientists (bias detection, observing socio-dynamic effects)

- Modelers (computer science, statistics, or math)

- Translators (bring revscoring to your wiki by helping us translate and communicate about the project)

- Labelers (help us train new models by labeling edits/articles)

- Wiki tool developers (use ORES to build what you think Wikipedians need)